Image by Author

Large language models or LLMs have emerged as a driving catalyst in natural language processing. Their use-cases range from chatbots and virtual assistants to content generation and translation services. However, they have become one of the fastest-growing fields in the tech world – and we can find them all over the place.

As the need for more powerful language models grows, so does the need for effective optimization techniques.

However,many natural questions emerge:

How to improve their knowledge?

How to improve their general performance?

How to scale these models up?

The insightful presentation titled “A Survey of Techniques for Maximizing LLM Performance” by John Allard and Colin Jarvis from OpenAI DevDay tried to answer these questions. If you missed the event, you can catch the talk on YouTube.

This presentation provided an excellent overview of various techniques and best practices for enhancing the performance of your LLM applications. This article aims to summarize the best techniques to improve both the performance and scalability of our AI-powered solutions.

Understanding the Basics

LLMs are sophisticated algorithms engineered to understand, analyze, and produce coherent and contextually appropriate text. They achieve this through extensive training on vast amounts of linguistic data covering diverse topics, dialects, and styles. Thus, they can understand how human-language works.

However, when integrating these models in complex applications, there are some key challenges to consider:

Key Challenges in Optimizing LLMs

- LLMs Accuracy: Ensuring that LLMs output is accurate and reliable information without hallucinations.

- Resource Consumption: LLMs require substantial computational resources, including GPU power, memory and big infrastructure.

- Latency: Real-time applications demand low latency, which can be challenging given the size and complexity of LLMs.

- Scalability: As user demand grows, ensuring the model can handle increased load without degradation in performance is crucial.

Strategies for a Better Performance

The first question is about “How to improve their knowledge?”

Creating a partially functional LLM demo is relatively easy, but refining it for production requires iterative improvements. LLMs may need help with tasks needing deep knowledge of specific data, systems, and processes, or precise behavior.

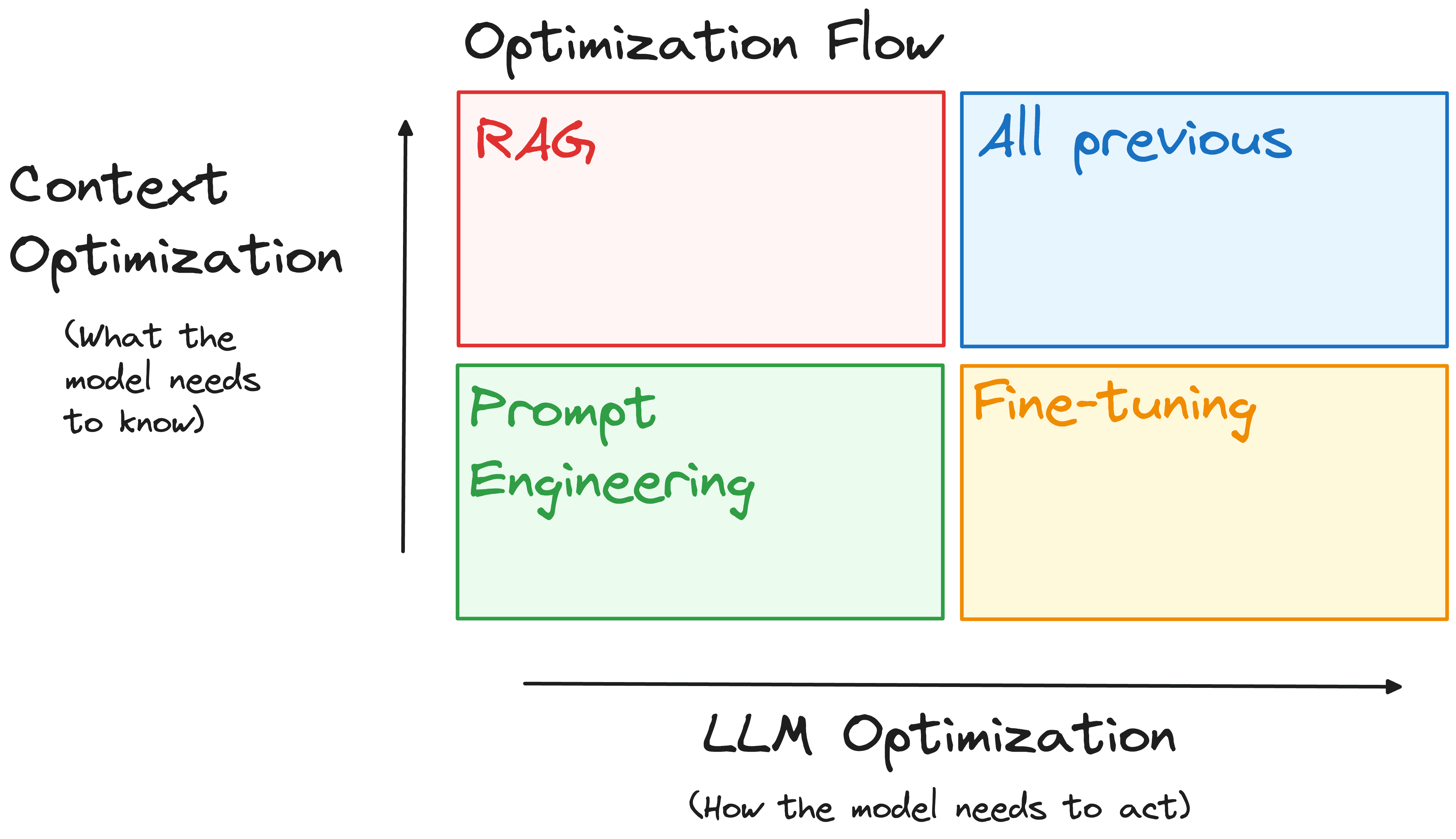

Teams use prompt engineering, retrieval augmentation, and fine-tuning to address this. A common mistake is to assume that this process is linear and should be followed in a specific order. Instead, it is more effective to approach it along two axes, depending on the nature of the issues:

- Context Optimization: Are the problems due to the model lacking access to the right information or knowledge?

- LLM Optimization: Is the model failing to generate the correct output, such as being inaccurate or not adhering to a desired style or format?

Image by Author

To address these challenges, three primary tools can be employed, each serving a unique role in the optimization process:

Prompt Engineering

Tailoring the prompts to guide the model’s responses. For instance, refining a customer service bot’s prompts to ensure it consistently provides helpful and polite responses.

Retrieval-Augmented Generation (RAG)

Enhancing the model’s context understanding through external data. For example, integrating a medical chatbot with a database of the latest research papers to provide accurate and up-to-date medical advice.

Fine-Tuning

Modifying the base model to better suit specific tasks. Just like fine-tuning a legal document analysis tool using a dataset of legal texts to improve its accuracy in summarizing legal documents.

The process is highly iterative, and not every technique will work for your specific problem. However, many techniques are additive. When you find a solution that works, you can combine it with other performance improvements to achieve optimal results.

Strategies for an Optimized Performance

The second question is about “How to improve their general performance?”

After having an accurate model, a second concerning point is the Inference time. Inference is the process where a trained language model, like GPT-3, generates responses to prompts or questions in real-world applications (like a chatbot).

It is a critical stage where models are put to the test, generating predictions and responses in practical scenarios. For big LLMs like GPT-3, the computational demands are enormous, making optimization during inference essential.

Consider a model like GPT-3, which has 175 billion parameters, equivalent to 700GB of float32 data. This size, coupled with activation requirements, necessitates significant RAM. This is why Running GPT-3 without optimization would require an extensive setup.

Some techniques can be used to reduce the amount of resources required to execute such applications:

Model Pruning

It involves trimming non-essential parameters, ensuring only the crucial ones to performance remain. This can drastically reduce the model’s size without significantly compromising its accuracy.

Which means a significant decrease in the computational load while still having the same accuracy. You can find easy-to-implement pruning code in the following GitHub.

Quantization

It is a model compression technique that converts the weights of a LLM from high-precision variables to lower-precision ones. This means we can reduce the 32-bit floating-point numbers to lower precision formats like 16-bit or 8-bit, which are more memory-efficient. This can drastically reduce the memory footprint and improve inference speed.

LLMs can be easily loaded in a quantized manner using HuggingFace and bitsandbytes. This allows us to execute and fine-tune LLMs in lower-power resources.

from transformers import AutoModelForSequenceClassification, AutoTokenizer

import bitsandbytes as bnb

# Quantize the model using bitsandbytes

quantized_model = bnb.nn.quantization.Quantize(

model,

quantization_dtype=bnb.nn.quantization.quantization_dtype.int8

)

Distillation

It is the process of training a smaller model (student) to mimic the performance of a larger model (also referred to as a teacher). This process involves training the student model to mimic the teacher’s predictions, using a combination of the teacher’s output logits and the true labels. By doing so, we can a achieve similar performance with a fraction of the resource requirement.

The idea is to transfer the knowledge of larger models to smaller ones with simpler architecture. One of the most known examples is Distilbert.

This model is the result of mimicking the performance of Bert. It is a smaller version of BERT that retains 97% of its language understanding capabilities while being 60% faster and 40% smaller in size.

Techniques for Scalability

The third question is about “How to scale these models up?”

This step is often crucial. An operational system can behave very differently when used by a handful of users versus when it scales up to accommodate intensive usage. Here are some techniques to address this challenge:

Load-balancing

This approach distributes incoming requests efficiently, ensuring optimal use of computational resources and dynamic response to demand fluctuations. For instance, to offer a widely-used service like ChatGPT across different countries, it is better to deploy multiple instances of the same model.

Effective load-balancing techniques include:

Horizontal Scaling: Add more model instances to handle increased load. Use container orchestration platforms like Kubernetes to manage these instances across different nodes.

Vertical Scaling: Upgrade existing machine resources, such as CPU and memory.

Sharding

Model sharding distributes segments of a model across multiple devices or nodes, enabling parallel processing and significantly reducing latency. Fully Sharded Data Parallelism (FSDP) offers the key advantage of utilizing a diverse array of hardware, such as GPUs, TPUs, and other specialized devices in several clusters.

This flexibility allows organizations and individuals to optimize their hardware resources according to their specific needs and budget.

Caching

Implementing a caching mechanism reduces the load on your LLM by storing frequently accessed results, which is especially beneficial for applications with repetitive queries. Caching these frequent queries can significantly save computational resources by eliminating the need to repeatedly process the same requests over.

Additionally, batch processing can optimize resource usage by grouping similar tasks.

Conclusion

For those building applications reliant on LLMs, the techniques discussed here are crucial for maximizing the potential of this transformative technology. Mastering and effectively applying strategies to a more accurate output of our model, optimize its performance, and allowing scaling up are essential steps in evolving from a promising prototype to a robust, production-ready model.

To fully understand these techniques, I highly recommend getting a deeper detail and starting to experiment with them in your LLM applications for optimal results.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the data science field applied to human mobility. He is a part-time content creator focused on data science and technology. Josep writes on all things AI, covering the application of the ongoing explosion in the field.