Image by Editor | Midjourney

Bayesian thinking is a way to make decisions using probability. It starts with initial beliefs (priors) and updates them as new evidence comes in, producing revised beliefs (posteriors). This leads to predictions and decisions that reflect both prior knowledge and observed data. It is central to fields like AI and statistics, where reasoning under uncertainty is important.

Fundamentals of Bayesian Theory

Key terms

- Prior Probability (Prior): Represents the initial belief about the hypothesis.

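- Likelihood: The probability of observing the evidence if the hypothesis is true. It measures how well the hypothesis explains the evidence.

- Posterior Probability (Posterior): The updated belief about the hypothesis after seeing the evidence. It is obtained by combining the prior probability and the likelihood.

- Evidence: The observed data used to update the probability of the hypothesis.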

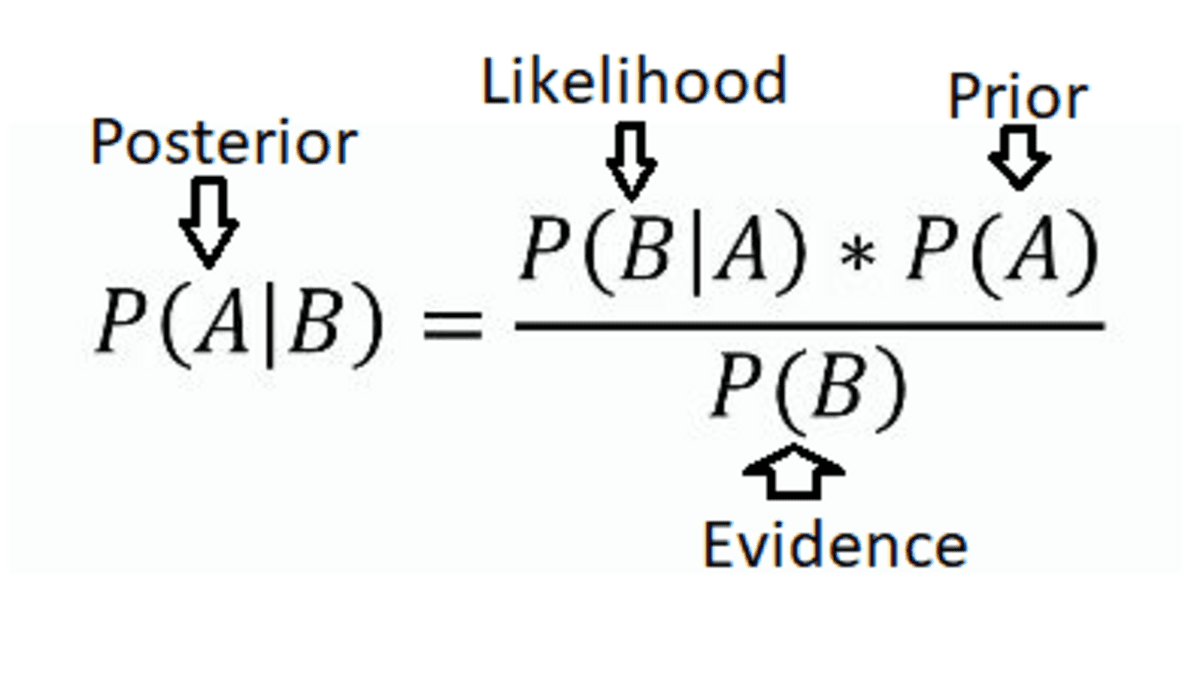

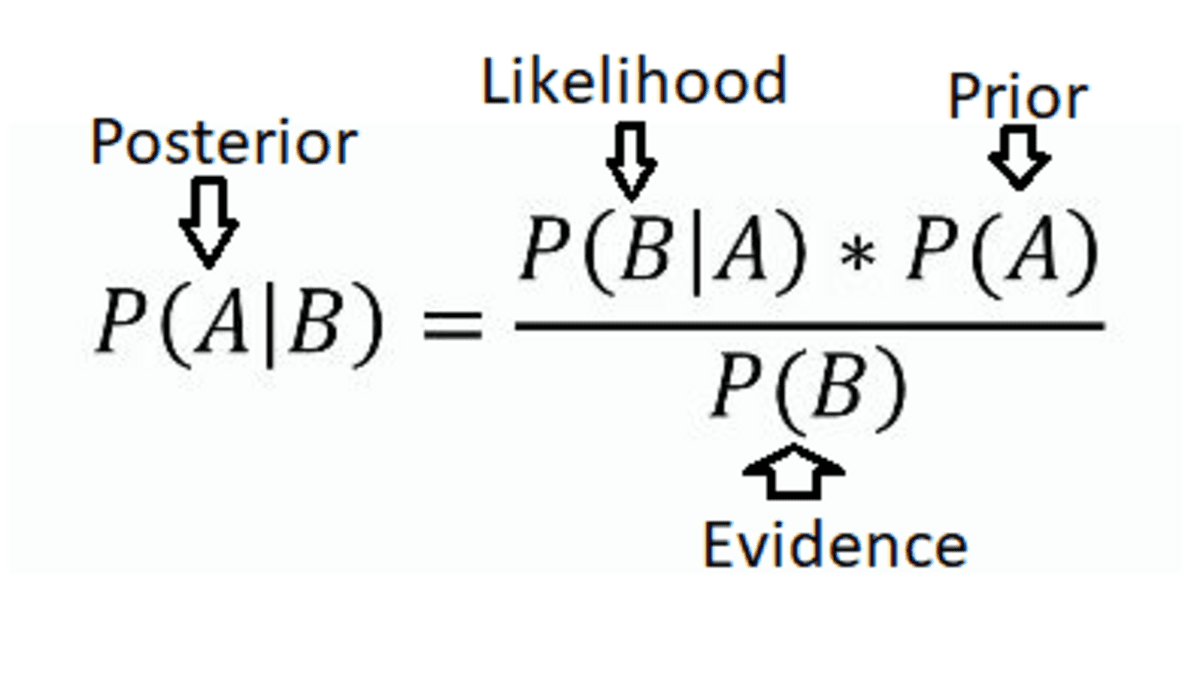

Bayes’ Theorem

This theorem describes how to update the probability of a hypothesis based on new information. It is mathematically expressed as:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

Bayes’ Theorem (Source: Eric Castellanos Blog)

where:

P(A|B) is the posterior probability of the hypothesis.

P(B|A) is the likelihood of the evidence given the hypothesis.

P(A) is the prior probability of the hypothesis.

P(B) is the total probability of the evidence.
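To make the formula concrete, here is a small worked example. All the numbers are hypothetical and chosen only for illustration. A test for a condition that affects 1% of a population detects it 95% of the time and gives a false positive 5% of the time. P(B) is computed with the law of total probability, P(B) = P(B|A)P(A) + P(B|not A)P(not A).

```python
def posterior(prior: float, likelihood: float, false_positive_rate: float) -> float:
    """Return P(A|B) via Bayes' theorem.

    prior               -- P(A), belief in the hypothesis before seeing evidence
    likelihood          -- P(B|A), probability of the evidence if A is true
    false_positive_rate -- P(B|not A), probability of the evidence if A is false
    """
    # Total probability of the evidence: P(B) = P(B|A)P(A) + P(B|not A)P(not A)
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: 1% prevalence, 95% sensitivity, 5% false-positive rate
p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(condition | positive test) = {p:.3f}")  # → 0.161
```

The posterior stays low even though the test is accurate, because the prior is small. This is exactly the kind of update the theorem formalizes.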

Applications of Bayesian Methods in Data Science

Bayesian Inference

Bayesian inference updates beliefs under uncertainty. It uses Bayes’ theorem to combine prior knowledge with new data. The result is a posterior distribution that quantifies how much uncertainty remains. Each posterior can serve as the prior for the next round of evidence, so predictions and understanding keep improving as more data are gathered. This makes it useful for decision-making where uncertainty needs to be managed explicitly.
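Here is a minimal sketch of this updating loop. It assumes a simple coin-flip-style problem: an unknown success probability theta, a uniform prior, and made-up observations. The posterior is computed on a grid of theta values as prior × likelihood, then normalized. Each posterior becomes the prior for the next observation. Grid approximation is only an illustrative way to apply Bayes' theorem; libraries such as PyMC use sampling instead.

```python
def normalize(weights):
    """Scale a list of non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def update(prior, grid, success):
    """One Bayesian update on a grid: posterior ∝ likelihood × prior."""
    likelihood = [theta if success else 1 - theta for theta in grid]
    return normalize([l * p for l, p in zip(likelihood, prior)])


# Grid of candidate values for the unknown success probability theta
grid = [i / 100 for i in range(101)]
belief = normalize([1.0] * len(grid))  # uniform prior: every theta equally plausible

# Hypothetical observations (1 = success, 0 = failure), processed one at a time
observations = [1, 0, 1, 1, 1, 0, 1, 1]
for obs in observations:
    belief = update(belief, grid, success=obs == 1)  # the last posterior is the next prior

posterior_mean = sum(theta * w for theta, w in zip(grid, belief))
print(f"Posterior mean of theta: {posterior_mean:.3f}")
```

Processing the observations one at a time gives the same posterior as processing them all at once. This is why Bayesian updating fits naturally with data that arrive over time.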

Example: In clinical trials, Bayesian methods estimate the effectiveness of new treatments. They combine prior beliefs from past studies with data from the current trial to update the probability that the treatment works. Researchers can then make better decisions using both old and new information.
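One common way to model this is the Beta-Binomial model. It is a conjugate pair, so the posterior has a closed form. The sketch below assumes the treatment's response rate has a Beta prior that summarizes earlier studies. The prior parameters and trial counts are hypothetical and used only for illustration. The code then uses Monte Carlo draws from the posterior to estimate the probability that the response rate exceeds 50%.

```python
import random


def beta_binomial_update(alpha, beta, successes, failures):
    """Conjugate update: Beta(alpha, beta) prior + binomial data -> Beta posterior."""
    return alpha + successes, beta + failures


# Hypothetical prior from past studies: roughly 12 responders out of 30 patients
prior_alpha, prior_beta = 12, 18

# Hypothetical current trial: 27 of 40 patients respond
post_alpha, post_beta = beta_binomial_update(prior_alpha, prior_beta, successes=27, failures=13)

posterior_mean = post_alpha / (post_alpha + post_beta)

# Monte Carlo estimate of P(response rate > 0.5 | all data)
rng = random.Random(0)
draws = [rng.betavariate(post_alpha, post_beta) for _ in range(100_000)]
prob_above_half = sum(d > 0.5 for d in draws) / len(draws)

print(f"Posterior: Beta({post_alpha}, {post_beta})")
print(f"Posterior mean response rate: {posterior_mean:.3f}")
print(f"P(response rate > 0.5): {prob_above_half:.3f}")
```

The prior acts like extra "pseudo-patients" from earlier studies. As the current trial grows, its data increasingly dominate the posterior.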

Predictive Modeling and Uncertainty Quantification

Predictive modeling with uncertainty quantification means making predictions and also measuring how confident we are in them. Bayesian methods are effective here because they handle uncertainty directly. Rather than predicting only an outcome, they provide probabilistic forecasts that indicate our confidence in each prediction. This is achieved through posterior distributions, which quantify uncertainty about the model's parameters and, in turn, about its predictions.
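Here is a small sketch of how a posterior distribution becomes a predictive range. It assumes a hypothetical Beta(39, 31) posterior for a success rate. Each Monte Carlo step draws a plausible rate from the posterior and then simulates future outcomes at that rate. The spread of the simulated outcomes therefore reflects both parameter uncertainty and ordinary randomness. The number of draws and the 20-trial horizon are illustrative choices.

```python
import random


def posterior_predictive(alpha, beta, n_future, n_draws=20_000, seed=0):
    """Simulate future success counts from a Beta(alpha, beta) posterior.

    For each draw: sample a rate from the posterior, then sample how many of
    the next n_future trials succeed at that rate.
    """
    rng = random.Random(seed)
    counts = []
    for _ in range(n_draws):
        rate = rng.betavariate(alpha, beta)  # parameter uncertainty
        counts.append(sum(rng.random() < rate for _ in range(n_future)))  # outcome randomness
    return counts


counts = sorted(posterior_predictive(alpha=39, beta=31, n_future=20))
low = counts[len(counts) * 5 // 100]        # 5th percentile
high = counts[len(counts) * 95 // 100 - 1]  # 95th percentile
mean = sum(counts) / len(counts)
print(f"Expected successes in next 20 trials: {mean:.1f}")
print(f"90% predictive interval: {low} to {high}")
```

Reporting the interval alongside the mean is what distinguishes a Bayesian forecast from a single point prediction.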

Example: Bayesian regression can predict stock prices as a range of plausible values rather than a single number. Traders can use this range to manage risk when making investment choices.
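Here is a minimal sketch of Bayesian linear regression that returns a predictive range instead of a single number. To stay self-contained, it assumes the noise standard deviation is known. It also puts independent Normal priors on the intercept and slope. Together these give a closed-form Gaussian posterior (the conjugate case). The data are synthetic, not real prices, and the prior scale and noise level are illustrative assumptions.

```python
import math
import random


def fit_bayesian_linear_regression(xs, ys, noise_sd, prior_sd):
    """Closed-form posterior for y = w0 + w1*x + noise, w ~ N(0, prior_sd^2 I).

    Returns the posterior mean (m0, m1) and the 2x2 covariance matrix S.
    """
    noise_var, prior_prec = noise_sd ** 2, 1.0 / prior_sd ** 2
    # Posterior precision A = I/prior_sd^2 + Phi^T Phi / noise_var, with Phi rows [1, x]
    a00 = prior_prec + len(xs) / noise_var
    a01 = sum(xs) / noise_var
    a11 = prior_prec + sum(x * x for x in xs) / noise_var
    det = a00 * a11 - a01 * a01
    s00, s01, s11 = a11 / det, -a01 / det, a00 / det  # S = A^{-1}
    # Posterior mean m = S Phi^T y / noise_var
    b0 = sum(ys) / noise_var
    b1 = sum(x * y for x, y in zip(xs, ys)) / noise_var
    post_mean = (s00 * b0 + s01 * b1, s01 * b0 + s11 * b1)
    return post_mean, ((s00, s01), (s01, s11))


def predict(x_new, post_mean, cov, noise_sd):
    """Posterior predictive mean and standard deviation at x_new."""
    mu = post_mean[0] + post_mean[1] * x_new
    # Parameter uncertainty phi^T S phi (phi = [1, x_new]) plus irreducible noise
    param_var = cov[0][0] + 2 * cov[0][1] * x_new + cov[1][1] * x_new ** 2
    return mu, math.sqrt(param_var + noise_sd ** 2)


# Synthetic data from a known line (intercept 1, slope 2) plus Gaussian noise
rng = random.Random(42)
xs = [i / 99 for i in range(100)]
ys = [1 + 2 * x + rng.gauss(0, 0.5) for x in xs]

post_mean, cov = fit_bayesian_linear_regression(xs, ys, noise_sd=0.5, prior_sd=10.0)
mu, sd = predict(1.2, post_mean, cov, noise_sd=0.5)
print(f"Posterior mean: intercept={post_mean[0]:.2f}, slope={post_mean[1]:.2f}")
print(f"Prediction at x=1.2: {mu:.2f} (95% interval {mu - 1.96 * sd:.2f} to {mu + 1.96 * sd:.2f})")
```

The predictive standard deviation is always larger than the noise level alone. It also grows as x_new moves away from the training data, because the parameter-uncertainty term increases.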

Bayesian Neural Networks

Bayesian neural networks (BNNs) are neural networks that produce probabilistic outputs: predictions together with a measure of their uncertainty. Instead of fixed values, BNNs use probability distributions for their weights and biases, which lets uncertainty propagate through the network to the output. This makes them useful for classification and regression tasks where decisions depend on knowing how reliable a prediction is.
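The core idea can be sketched without a deep learning framework. The tiny one-hidden-layer network below stores a mean and a standard deviation for every weight instead of a single value. These numbers are hypothetical stand-ins for what training (for example, variational inference) would produce. To predict, the code samples a full set of weights many times and runs the network once with each set. The spread of the outputs then serves as the uncertainty estimate.

```python
import math
import random
import statistics

# Hypothetical learned weight distributions: (mean, std) per parameter.
# A real BNN would learn these from data; here they are fixed for illustration.
HIDDEN = [  # each hidden unit: (input weight, bias)
    ((1.5, 0.2), (0.0, 0.1)),
    ((-1.0, 0.3), (0.5, 0.1)),
    ((0.8, 0.5), (-0.2, 0.2)),
]
OUTPUT_WEIGHTS = [(1.0, 0.1), (-0.7, 0.2), (0.9, 0.4)]
OUTPUT_BIAS = (0.1, 0.05)


def sample(param, rng):
    """Draw one value from a Normal(mean, std) weight distribution."""
    mean, std = param
    return rng.gauss(mean, std)


def forward_once(x, rng):
    """Run the network once with a freshly sampled set of weights."""
    hidden = [math.tanh(sample(w, rng) * x + sample(b, rng)) for w, b in HIDDEN]
    out = sum(sample(w, rng) * h for w, h in zip(OUTPUT_WEIGHTS, hidden))
    return out + sample(OUTPUT_BIAS, rng)


def predict(x, n_samples=2000, seed=0):
    """Monte Carlo prediction: mean output and its standard deviation."""
    rng = random.Random(seed)
    outputs = [forward_once(x, rng) for _ in range(n_samples)]
    return statistics.fmean(outputs), statistics.stdev(outputs)


for x in (0.0, 1.0, 3.0):
    mean, std = predict(x)
    print(f"x={x}: prediction {mean:.2f} ± {std:.2f}")
```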

Example: In fraud detection, Bayesian models can analyze relationships between variables such as transaction history and user behavior to spot unusual patterns linked to fraud. They can improve the accuracy of fraud detection systems compared with traditional approaches.
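Here is a tiny probabilistic model for this setting. It is a small Bayesian network (a graphical model, distinct from a Bayesian neural network). One hidden cause (Fraud) has two observed children (UnusualLocation, LargeAmount), which are assumed to be conditionally independent given Fraud. All the probabilities below are hypothetical. The code computes the posterior P(Fraud | evidence) by enumerating both values of Fraud and normalizing, which is Bayes' theorem applied to the network.

```python
# Hypothetical conditional probability tables for a 3-node Bayesian network:
# Fraud -> UnusualLocation, Fraud -> LargeAmount
P_FRAUD = 0.002
P_UNUSUAL_LOCATION = {True: 0.60, False: 0.05}  # P(unusual location | fraud?)
P_LARGE_AMOUNT = {True: 0.70, False: 0.10}      # P(large amount | fraud?)


def likelihood(fraud, unusual_location, large_amount):
    """P(observations | fraud), using conditional independence given Fraud."""
    p_loc = P_UNUSUAL_LOCATION[fraud] if unusual_location else 1 - P_UNUSUAL_LOCATION[fraud]
    p_amt = P_LARGE_AMOUNT[fraud] if large_amount else 1 - P_LARGE_AMOUNT[fraud]
    return p_loc * p_amt


def p_fraud_given(unusual_location, large_amount):
    """Posterior P(Fraud=True | evidence) by enumeration over Fraud."""
    joint_fraud = P_FRAUD * likelihood(True, unusual_location, large_amount)
    joint_legit = (1 - P_FRAUD) * likelihood(False, unusual_location, large_amount)
    return joint_fraud / (joint_fraud + joint_legit)


for loc, amt in [(False, False), (True, False), (True, True)]:
    print(f"unusual_location={loc}, large_amount={amt}: "
          f"P(fraud) = {p_fraud_given(loc, amt):.4f}")
```

Observing both warning signs raises the fraud probability far more than either sign alone. The low prior still keeps the result well below certainty.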

Tools and Libraries for Bayesian Analysis

Several tools and libraries are available to implement Bayesian methods effectively. Let’s look at some popular ones.

PyMC4

PyMC4 (released as version 4 of PyMC and imported as pymc) is a library for probabilistic programming in Python. It supports Bayesian modeling and inference and builds on the strengths of its predecessor, PyMC3. A significant improvement is that models can be compiled to JAX, which provides automatic differentiation and GPU acceleration through JAX-based samplers. This can make Bayesian models faster and more scalable.

Stan

Stan is a probabilistic programming language implemented in C++ and available through several interfaces (RStan, PyStan, CmdStan, and others). It excels at efficient Hamiltonian Monte Carlo (HMC) sampling, including the No-U-Turn Sampler (NUTS), and is known for its speed and accuracy. It also includes extensive diagnostics and tools for model checking.

TensorFlow Probability

TensorFlow Probability (TFP) is a library for probabilistic reasoning and statistical analysis in TensorFlow. It provides a range of distributions, bijectors, and MCMC algorithms. Its integration with TensorFlow lets models run efficiently on CPUs, GPUs, and TPUs, and allows users to combine probabilistic models with deep learning architectures. This supports robust, data-driven decision-making.

Let’s look at an example of Bayesian statistics using PyMC4: a Bayesian linear regression.

```python
import arviz as az
import numpy as np
import pymc as pm

# Generate synthetic data from a known line plus Gaussian noise
np.random.seed(42)
X = np.linspace(0, 1, 100)
true_intercept = 1
true_slope = 2
y = true_intercept + true_slope * X + np.random.normal(scale=0.5, size=len(X))

# Define the model
with pm.Model() as model:
    # Priors for unknown model parameters
    intercept = pm.Normal("intercept", mu=0, sigma=10)
    slope = pm.Normal("slope", mu=0, sigma=10)
    sigma = pm.HalfNormal("sigma", sigma=1)  # noise standard deviation (must be positive)

    # Likelihood (sampling distribution) of observations
    mu = intercept + slope * X
    likelihood = pm.Normal("y", mu=mu, sigma=sigma, observed=y)

    # Inference: draw 2000 posterior samples per chain (NUTS sampler by default)
    trace = pm.sample(2000, return_inferencedata=True)

# Summarize the results: posterior means, credible intervals, convergence diagnostics
print(az.summary(trace))
```

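Here is what the code does, step by step.

- It generates synthetic data from a known line (intercept 1, slope 2) with Gaussian noise, so the estimates can be checked against the true values.

- It sets initial beliefs (priors) for the intercept, slope, and noise standard deviation.

- It defines the likelihood: each observed y is modeled as normally distributed around intercept + slope * X, with standard deviation sigma.

- It uses Markov chain Monte Carlo (MCMC) sampling, with the NUTS sampler by default, to draw samples from the posterior distribution.

- Finally, it summarizes the results to show estimated parameter values and their uncertainties.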
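To see what MCMC sampling does under the hood, here is a minimal random-walk Metropolis sampler for the same regression model. It uses only the Python standard library. This is a deliberately simpler algorithm than the NUTS sampler PyMC uses by default. For brevity, it also fixes the noise standard deviation at its true value of 0.5 instead of sampling sigma. The step size, iteration count, and burn-in length are illustrative choices.

```python
import math
import random


def log_posterior(intercept, slope, xs, ys, noise_sd=0.5, prior_sd=10.0):
    """Unnormalized log posterior: Normal(0, 10) priors + Normal likelihood."""
    log_prior = -(intercept ** 2 + slope ** 2) / (2 * prior_sd ** 2)
    resid_sq = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return log_prior - resid_sq / (2 * noise_sd ** 2)


def metropolis(xs, ys, n_iter=10_000, step=0.1, seed=0):
    """Random-walk Metropolis: propose a nearby point, accept with prob min(1, ratio)."""
    rng = random.Random(seed)
    current = (0.0, 0.0)
    current_lp = log_posterior(*current, xs, ys)
    samples = []
    for _ in range(n_iter):
        proposal = (current[0] + rng.gauss(0, step), current[1] + rng.gauss(0, step))
        proposal_lp = log_posterior(*proposal, xs, ys)
        # Symmetric proposal, so accept if log(u) < log posterior ratio; u in (0, 1]
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        samples.append(current)  # a rejected proposal repeats the current point
    return samples


# Synthetic data like the PyMC example: intercept 1, slope 2, noise sd 0.5
rng = random.Random(42)
xs = [i / 99 for i in range(100)]
ys = [1 + 2 * x + rng.gauss(0, 0.5) for x in xs]

samples = metropolis(xs, ys)[2_000:]  # discard burn-in
intercepts = [s[0] for s in samples]
slopes = [s[1] for s in samples]
print(f"intercept ≈ {sum(intercepts) / len(intercepts):.2f}")
print(f"slope ≈ {sum(slopes) / len(slopes):.2f}")
```

Averaging the retained samples approximates the posterior means shown in the summary table. NUTS explores the same kind of posterior far more efficiently, especially as the number of parameters grows.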

Wrapping Up

Bayesian methods combine prior beliefs with new evidence for informed decision-making. They can improve predictive accuracy and make uncertainty explicit across many domains. Tools like PyMC4, Stan, and TensorFlow Probability provide robust support for Bayesian analysis and help practitioners make probabilistic predictions from complex data.

Jayita Gulati is a machine learning enthusiast and technical writer driven by her passion for building machine learning models. She holds a Master’s degree in Computer Science from the University of Liverpool.