Imagine if you could automate the tedious task of analyzing earnings reports, extracting key insights, and making informed recommendations—all without lifting a finger. In this article, we’ll walk you through how to create a multi-agent system using OpenAI’s Swarm framework, designed to handle these exact tasks. You’ll learn how to set up and orchestrate three specialized agents: one to summarize earnings reports, another to analyze sentiment, and a third to generate actionable recommendations. By the end of this tutorial, you’ll have a scalable, modular solution to streamline financial analysis, with potential applications beyond just earnings reports.

Learning Outcomes

- Understand the fundamentals of OpenAI’s Swarm framework for multi-agent systems.

- Learn how to create agents for summarizing, sentiment analysis, and recommendations.

- Explore the use of modular agents for earnings report analysis.

- Securely manage API keys using a .env file.

- Implement a multi-agent system to automate earnings report processing.

- Gain insights into real-world applications of multi-agent systems in finance.

- Set up and execute a multi-agent workflow using OpenAI’s Swarm framework.

This article was published as a part of the Data Science Blogathon.

What is OpenAI’s Swarm?

Swarm is a lightweight, experimental framework from OpenAI that focuses on multi-agent orchestration. It allows us to coordinate multiple agents, each handling specific tasks, like summarizing content, performing sentiment analysis, or recommending actions. In our case, we’ll design three agents:

- Summary Agent: Provides a concise summary of the earnings report.

- Sentiment Agent: Analyzes sentiment from the report.

- Recommendation Agent: Recommends actions based on sentiment analysis.
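Before touching Swarm, it can help to see this division of labor as plain Python. The sketch below is a hypothetical, dependency-free stand-in (no LLM, no Swarm). It chains three stage functions over a shared context dictionary. The stage logic mirrors the agents built later in this article, but `run_pipeline` and the stage names are illustrative only.

```python
from typing import Callable, Dict, List

Context = Dict[str, str]


def summarize(ctx: Context) -> None:
    # Stage 1: a crude summary, the first 100 characters of the report.
    ctx["summary"] = ctx["report_text"][:100]


def classify(ctx: Context) -> None:
    # Stage 2: keyword-based sentiment label.
    ctx["sentiment"] = "positive" if "profit" in ctx["report_text"].lower() else "negative"


def recommend(ctx: Context) -> None:
    # Stage 3: map the sentiment label to an action.
    ctx["recommendation"] = "Buy" if ctx["sentiment"] == "positive" else "Hold"


def run_pipeline(report_text: str, stages: List[Callable[[Context], None]]) -> Context:
    """Run each stage in order; later stages read what earlier stages wrote."""
    ctx: Context = {"report_text": report_text}
    for stage in stages:
        stage(ctx)
    return ctx


result = run_pipeline(
    "Company XYZ reported a 20% increase in profits compared to the previous quarter.",
    [summarize, classify, recommend],
)
print(result["sentiment"], result["recommendation"])  # positive Buy
```

Swarm adds an LLM in front of each stage, but the data flow is the same: each agent reads from and contributes to shared state.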

Use Cases and Benefits of Multi-Agent Systems

You can expand the multi-agent system built here for various use cases.

- Portfolio Management: Automate monitoring of multiple company reports and suggest portfolio changes based on sentiment trends.

- News Summarization for Finance: Integrate real-time news feeds with these agents to detect potential market movements early.

- Sentiment Tracking: Use sentiment analysis of positive or negative market news as a signal for anticipating stock or crypto price movements.

By splitting tasks into modular agents, you can reuse individual components across different projects, allowing for flexibility and scalability.
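For the portfolio-monitoring and sentiment-tracking ideas above, one simple building block is to flag companies whose latest sentiment label differs from the previous one. The sketch below assumes you have already collected one label per report (for example, from the Sentiment Agent) into a per-company history. `sentiment_shifts` is a hypothetical helper, and the company names and labels are made up for illustration.

```python
from typing import Dict, List


def sentiment_shifts(history: Dict[str, List[str]]) -> Dict[str, str]:
    """Return companies whose latest sentiment label differs from the previous one."""
    shifts: Dict[str, str] = {}
    for company, labels in history.items():
        # At least two reports are needed to detect a change.
        if len(labels) < 2:
            continue
        previous, latest = labels[-2], labels[-1]
        if previous != latest:
            shifts[company] = f"{previous} -> {latest}"
    return shifts


# Hypothetical label histories, oldest report first.
history = {
    "XYZ": ["negative", "negative", "positive"],
    "ABC": ["positive", "positive"],
    "NEW": ["positive"],
}
print(sentiment_shifts(history))  # {'XYZ': 'negative -> positive'}
```

Because the function only consumes labels, it works unchanged whichever agent (or model) produced them.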

Step 1: Setting Up Your Project Environment

Before we dive into coding, it’s essential to lay a solid foundation for the project. In this step, you’ll create the necessary folders and files and install the required dependencies to get everything running smoothly.
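On Windows, `touch` is not a built-in command. If you prefer to stay in Python, the same folder layout can be created with the standard `pathlib` module. This is a minimal sketch that is equivalent to the shell commands in this step. `create_project` is a hypothetical helper name.

```python
from pathlib import Path


def create_project(base: Path) -> Path:
    """Create the earnings_report folder layout from Step 1 under `base` and return its path."""
    root = base / "earnings_report"
    for folder in ("agents", "utils"):
        # parents=True also creates earnings_report; exist_ok=True makes reruns safe.
        (root / folder).mkdir(parents=True, exist_ok=True)
    for name in ("main.py", "agents/__init__.py", "utils/__init__.py", ".gitignore"):
        # touch() creates an empty file if missing; an existing file keeps its contents.
        (root / name).touch()
    return root
```

Calling `create_project(Path.cwd())` from the folder where you want the project has the same effect as the `mkdir` and `touch` commands. You still need to `cd earnings_report` afterwards.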

```bash
mkdir earnings_report
cd earnings_report
mkdir agents utils
touch main.py agents/__init__.py utils/__init__.py .gitignore
```

Install Dependencies

```bash
pip install git+https://github.com/openai/swarm.git openai python-dotenv
```

Step 2: Store Your API Key Securely

Security matters, especially with credentials such as API keys. Create a file named .env in the project root (the earnings_report folder) and store your OpenAI API key in it:

```text
OPENAI_API_KEY=your-openai-api-key-here
```

This keeps the key out of your source code. Also add a line containing .env to your .gitignore file so the key is never committed to version control.
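To confirm the key is actually picked up without printing it in full, you can use a small check like the following. It is a sketch: `require_api_key` and `mask_secret` are hypothetical helper names. In this project you would call `load_dotenv()` from python-dotenv first, so the values in .env are already in the environment.

```python
import os


def mask_secret(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a secret."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment, failing fast with a clear message if it is missing."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"{name} is not set. Add it to your .env file.")
    return value


# Usage (after load_dotenv()):
#     key = require_api_key()
#     print("Loaded key", mask_secret(key))
```

Failing at startup gives a clearer error than a later authentication failure from the API.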

Step 3: Implement the Agents

Now, it’s time to bring your agents to life! In this step, you’ll create three separate agents: one for summarizing the earnings report, another for sentiment analysis, and a third for generating actionable recommendations based on the sentiment.
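All three tool functions below take a single `context_variables` argument. When the model calls one of them, Swarm fills that argument from the `context_variables` you pass to `client.run()` and hides it from the tool schema the model sees. The snippet below is a simplified, hypothetical imitation of that injection step. Swarm's own code does more, such as parsing the model's JSON arguments. The snippet only shows why the functions can read `report_text` without the model supplying it.

```python
import inspect

CTX_VARS_NAME = "context_variables"


def call_tool(func, model_args: dict, context_variables: dict) -> str:
    """Call a tool function, injecting context_variables only if it declares that parameter."""
    args = dict(model_args)  # copy so the caller's dict is not modified
    if CTX_VARS_NAME in inspect.signature(func).parameters:
        args[CTX_VARS_NAME] = context_variables
    # Plain return values are converted to strings before going back to the model.
    return str(func(**args))


def summarize_report(context_variables):
    # Shortened to 20 characters for this demo.
    return f"Summary: {context_variables['report_text'][:20]}..."


def echo(text):
    return text


ctx = {"report_text": "Company XYZ reported a 20% increase in profits."}
print(call_tool(summarize_report, {}, ctx))  # Summary: Company XYZ reported...
print(call_tool(echo, {"text": "hi"}, ctx))  # hi
```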

Summary Agent

The Summary Agent's tool function returns the first 100 characters of the earnings report as its summary.

Create agents/summary_agent.py:

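```python
from swarm import Agent


def summarize_report(context_variables):
    """Return the first 100 characters of the earnings report as a summary."""
    # Swarm injects context_variables from client.run(); it is hidden from the model.
    report_text = context_variables["report_text"]
    return f"Summary: {report_text[:100]}..."


summary_agent = Agent(
    name="Summary Agent",
    instructions="Summarize the key points of the earnings report.",
    functions=[summarize_report],
)
```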
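Slicing at exactly 100 characters can cut a word in half, and the code appends "..." even when the report is shorter than that. If that matters to you, one alternative is Python's standard `textwrap.shorten`. Note that this swaps in a different technique and is not what the original agent does. `shorten` collapses whitespace and truncates at a word boundary, adding the placeholder only when text was removed. `summarize_text` is a hypothetical name.

```python
import textwrap


def summarize_text(report_text: str, width: int = 100) -> str:
    """Return a summary of at most `width` characters (after the prefix), cut at a word boundary."""
    # shorten() collapses runs of whitespace, then drops whole words from the end until
    # the text plus the placeholder fits within `width`.
    return "Summary: " + textwrap.shorten(report_text, width=width, placeholder="...")


print(summarize_text("Hello world!", width=11))  # Summary: Hello...
```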

Sentiment Agent

This agent uses a simple keyword rule: if the word “profit” appears in the report, the sentiment is positive; otherwise, it is negative.

Create agents/sentiment_agent.py:

```python
from swarm import Agent


def analyze_sentiment(context_variables):
    """Classify the report as positive if it mentions "profit", otherwise negative."""
    report_text = context_variables["report_text"]
    # Lower-case first so "Profit" and "PROFIT" also match.
    sentiment = "positive" if "profit" in report_text.lower() else "negative"
    return f"The sentiment of the report is: {sentiment}"


sentiment_agent = Agent(
    name="Sentiment Agent",
    instructions="Analyze the sentiment of the report.",
    functions=[analyze_sentiment],
)
```
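A single-keyword rule is easy to fool. For example, "profits fell sharply" would still be labeled positive. A slightly richer but still naive option is to count positive and negative keyword stems. The sketch below is a hypothetical variant, not the article's method. The stem lists are made up, and the approach ignores negation and context.

```python
import re

# Hypothetical word stems; tune these for your own reports.
POSITIVE_STEMS = ("profit", "grow", "grew", "increase", "gain", "beat")
NEGATIVE_STEMS = ("loss", "declin", "fell", "fall", "decrease", "miss", "weak")


def keyword_sentiment(report_text: str) -> str:
    """Score a report by counting positive and negative keyword stems."""
    words = re.findall(r"[a-z]+", report_text.lower())
    score = 0
    for word in words:
        if word.startswith(POSITIVE_STEMS):
            score += 1
        elif word.startswith(NEGATIVE_STEMS):
            score -= 1
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(keyword_sentiment("Profits fell sharply."))  # neutral
```

With the Recommendation Agent as written, a "neutral" label would map to "Hold", since anything other than "positive" does.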

Recommendation Agent

This agent suggests “Buy” when the sentiment is positive and “Hold” otherwise.

Create agents/recommendation_agent.py:

```python
from swarm import Agent


def generate_recommendation(context_variables):
    """Recommend "Buy" for positive sentiment and "Hold" otherwise."""
    # Normalize the label so stray whitespace or capitalization does not flip the result.
    sentiment = context_variables.get("sentiment", "").strip().lower()
    recommendation = "Buy" if sentiment == "positive" else "Hold"
    return f"My recommendation is: {recommendation}"


recommendation_agent = Agent(
    name="Recommendation Agent",
    instructions="Recommend actions based on the sentiment analysis.",
    functions=[generate_recommendation],
)
```
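The Buy/Hold rule is a single comparison, so it only works when the label arrives exactly as "positive". Because main.py parses that label out of a text message, a more forgiving variant can help. The sketch below uses the hypothetical names `RECOMMENDATIONS` and `recommend`. It keeps the article's rule (positive maps to Buy, anything else to Hold), but normalizes the label first and uses a lookup table that is easy to extend.

```python
# Hypothetical mapping table; extend it if you add labels such as "neutral".
RECOMMENDATIONS = {"positive": "Buy"}
DEFAULT_RECOMMENDATION = "Hold"


def recommend(sentiment_label: str) -> str:
    """Map a possibly messy sentiment label to an action, defaulting to Hold."""
    # Trim whitespace and trailing punctuation, then lower-case, so "Positive." still matches.
    label = sentiment_label.strip(" \t\n.!").lower()
    return RECOMMENDATIONS.get(label, DEFAULT_RECOMMENDATION)


print(recommend("Positive."))  # Buy
```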

Step 4: Add a Helper Function for File Loading

Create utils/helpers.py with a small helper that reads the earnings report from disk, so main.py can pass its text to the agents:

```python
def load_earnings_report(filepath):
    """Read and return the full text of an earnings report file."""
    with open(filepath, "r", encoding="utf-8") as file:
        return file.read()
```
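If the report file is missing or empty, the script either crashes with a bare traceback or sends the agents nothing to analyze. The sketch below is a more defensive variant that fails early with a descriptive message and strips leading and trailing whitespace. `load_report_checked` is a hypothetical name and is not part of the original project.

```python
from pathlib import Path


def load_report_checked(filepath: str) -> str:
    """Read a report, raising clear errors if the file is missing or empty."""
    path = Path(filepath)
    if not path.is_file():
        raise FileNotFoundError(f"Earnings report not found: {path.resolve()}")
    text = path.read_text(encoding="utf-8").strip()
    if not text:
        raise ValueError(f"Earnings report is empty: {path}")
    return text
```

A relative path such as "sample_earnings.txt" is resolved against the current working directory, so run main.py from the project root. Printing the resolved path in the error makes that mistake easy to spot.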

Step 5: Tie Everything Together in main.py

With your agents ready, it’s time to tie everything together. In this step, you’ll write the main script that loads the report and runs the three agents in sequence. The sentiment extracted by the Sentiment Agent is passed on to the Recommendation Agent.

```python
import os

from dotenv import load_dotenv
from swarm import Swarm

from agents.recommendation_agent import recommendation_agent
from agents.sentiment_agent import sentiment_agent
from agents.summary_agent import summary_agent
from utils.helpers import load_earnings_report

# Load environment variables from the .env file before creating the client,
# because Swarm() builds an OpenAI client that reads OPENAI_API_KEY from the environment.
load_dotenv()
if not os.getenv("OPENAI_API_KEY"):
    raise RuntimeError("OPENAI_API_KEY is not set. Add it to your .env file.")

# Initialize Swarm client
client = Swarm()

# Load earnings report
report_text = load_earnings_report("sample_earnings.txt")

# Run summary agent
response = client.run(
    agent=summary_agent,
    messages=[{"role": "user", "content": "Summarize the report"}],
    context_variables={"report_text": report_text},
)
print(response.messages[-1]["content"])

# Run sentiment agent on the full report text
response = client.run(
    agent=sentiment_agent,
    messages=[{"role": "user", "content": "Analyze the sentiment"}],
    context_variables={"report_text": report_text},
)
print(response.messages[-1]["content"])

# Extract the sentiment label. Prefer the tool's own output ("... is: positive"),
# because the model's final reply is free text whose wording can vary.
tool_outputs = [m["content"] for m in response.messages if m.get("role") == "tool"]
last_output = tool_outputs[-1] if tool_outputs else response.messages[-1]["content"]
sentiment = last_output.split(": ")[-1].strip().lower()

# Run recommendation agent
response = client.run(
    agent=recommendation_agent,
    messages=[{"role": "user", "content": "Give a recommendation"}],
    context_variables={"sentiment": sentiment},
)
print(response.messages[-1]["content"])
```
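Parsing the sentiment depends on which message you read. `response.messages[-1]` is the model's final free-text reply, while the tool's exact return value is recorded earlier in the list. In Swarm's current source, each tool result is appended as a message with `"role": "tool"` plus `"tool_name"` and `"content"` keys. Treat that shape as an assumption and check it against your installed version. The hypothetical helper below looks up the most recent output of a specific tool by name. The sample messages are made up to mimic that shape.

```python
from typing import Dict, List, Optional


def last_tool_output(messages: List[Dict], tool_name: Optional[str] = None) -> Optional[str]:
    """Return the content of the most recent tool message, optionally filtered by tool name."""
    for message in reversed(messages):
        if message.get("role") != "tool":
            continue
        if tool_name is None or message.get("tool_name") == tool_name:
            return message.get("content")
    return None


# Made-up messages in the assumed Swarm shape.
sample_messages = [
    {"role": "assistant", "content": None},
    {"role": "tool", "tool_call_id": "call_1", "tool_name": "analyze_sentiment",
     "content": "The sentiment of the report is: positive"},
    {"role": "assistant", "content": "The report's sentiment is positive."},
]
output = last_tool_output(sample_messages, "analyze_sentiment")
sentiment = output.split(": ")[-1].strip().lower() if output else None
print(sentiment)  # positive
```

If the helper returns None, the model did not call the tool, and you can fall back to the final message or re-run the agent.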

Step 6: Create a Sample Earnings Report

To test your system, you need data. Create a file named sample_earnings.txt in the project root (this is the filename main.py loads) with the following content:

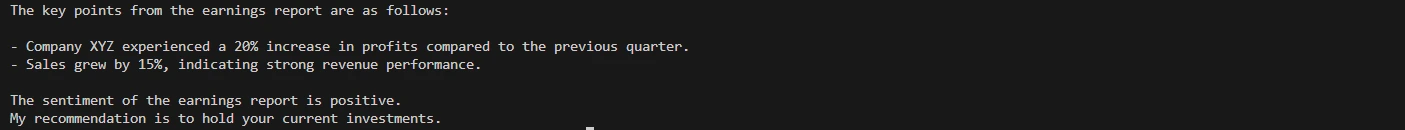

```text
Company XYZ reported a 20% increase in profits compared to the previous quarter.
Sales grew by 15%, and the company expects continued growth in the next fiscal year.
```

Step 7: Run the Program

Now that everything is set up, it’s time to run the program and watch your multi-agent system in action as it analyzes the earnings report, performs sentiment analysis, and offers recommendations.

```bash
python main.py
```

Expected Output:

Conclusion

We’ve built a multi-agent solution using OpenAI’s Swarm framework to automate the analysis of earnings reports. We can process financial information and offer actionable recommendations with just a few agents. You can easily extend this solution by adding new agents for deeper analysis or integrating real-time financial APIs.

Try it yourself and see how you can enhance it with additional data sources or agents for more advanced analysis!

Key Takeaways

- Modular Architecture: Breaking the system into multiple agents and utilities keeps the code maintainable and scalable.

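- Swarm Framework Power: Swarm supports handoffs between agents (a tool function can return another Agent to transfer control), which makes it straightforward to build more complex multi-agent workflows. This project keeps things simple by chaining the three agents in sequence from main.py.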

- Security via .env: Managing API keys with dotenv ensures that sensitive data isn’t hardcoded into the project.

- Extensibility: This project can expand to handle live financial data by integrating APIs, enabling it to provide real-time recommendations for investors.

Frequently Asked Questions

Q1. What is OpenAI’s Swarm?

A. OpenAI’s Swarm is an experimental framework designed for coordinating multiple agents to perform specific tasks. It’s ideal for building modular systems where each agent has a defined role, such as summarizing content, performing sentiment analysis, or generating recommendations.

Q2. Which agents make up the multi-agent system in this tutorial?

A. In this tutorial, the multi-agent system consists of three key agents: the Summary Agent, Sentiment Agent, and Recommendation Agent. Each agent performs a specific function like summarizing an earnings report, analyzing its sentiment, or recommending actions based on sentiment.

Q3. How can I store my OpenAI API key securely?

A. You can store your API key securely in a .env file. This way, the API key is not exposed directly in your code, maintaining security. The .env file can be loaded using the python-dotenv package.

Q4. Can this project handle live financial data?

A. Yes, the project can be extended to handle live data by integrating financial APIs. You can create additional agents to fetch real-time earnings reports and analyze trends to provide up-to-date recommendations.

Q5. Can I reuse these agents in other projects?

A. Yes, the agents are designed to be modular, so you can reuse them in other projects. You can adapt them to different tasks such as summarizing news articles, performing text sentiment analysis, or making recommendations based on any form of structured data.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.