In a recent presentation for students at the University of Wisconsin-Madison, I encouraged them to expand the scope of their AI usage beyond the stereotypical applications like homework and social media. I started the discussion off with a question: Can AI’s impact be simultaneously over- and under-hyped?

My conclusion is yes, it can be both. But I reached this conclusion based on a related insight: Our view of AI will be greatly shaped by whether and when we’re able to invest the time for do-it-yourself (DIY) AI training and experimentation.

It’s always important to experiment with new technologies, but it’s particularly important with AI. I recently asked myself whether my own adoption of AI, and the value I was getting from it, was too limited. I decided to commit even more time to DIY experimentation.

Experimenting on your own with technologies like AI can shift your mindset and help you work through the “trough of disillusionment” that accompanies much-hyped technologies, while also driving more value for your customers.

Remember your ChatGPT moment

We’ve all experienced AI vertigo over the past two years. Personally, I was fortunate in my timing relative to ChatGPT’s release.

In late 2022, I made the decision to step away from my corporate leadership role for the longest break I would ever take. I eventually transitioned to consulting and teaching. I, of course, had no idea this break would coincide with ChatGPT’s launch. It’s still fascinating to hear that OpenAI actually described it as a “low-key research preview.”

My break meant I had the time for self-directed training, testing and learning. Many friends and colleagues who were still in corporate roles did not have the time for experimentation and were quick to label AI as over-hyped.

Many of these colleagues lacked the capacity or leadership air cover to make time for self-training. I was lucky. I attended live events rather than on-demand sessions. I binge-listened to any related podcasts. But most importantly, I had time to try things in a DIY mode that convinced me of the value, despite any limitations.

Crossing the AI DIY Chasm

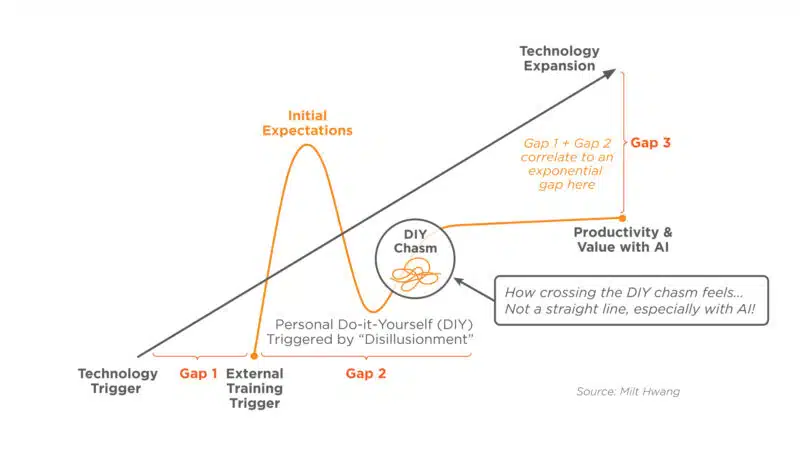

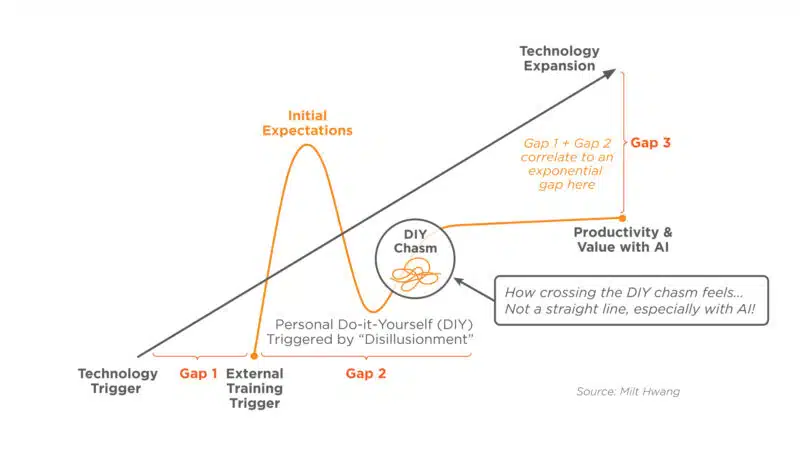

To illustrate the importance of DIY time to creating value, I created my own hype cycle graphic with various training triggers that I believe we all pass through.

Gap 1 is the time between a technology being launched and when you start learning. This is typically based on an external training trigger, through your job or a peer network.

Gap 2 is not only when you leverage the technology, but critically, when you cross what I’m calling the “DIY Chasm.” You have to zig and zag your way through limitations and reach those “A-Ha” moments on your own terms.

The cumulative time spent in Gap 1 and Gap 2 has an exponential impact on the gap you feel between the personal productivity and value you’re getting from AI and what the technology is currently capable of.

In my previous roles, I, too, would have held the view that AI is over-hyped. The pressures of managing day-to-day projects meant established patterns for managing martech had set in. Previously, when you got stuck or hit a limit, a quick search produced an answer. But if that knowledge base did not include your specific context, you were stuck. We often blamed the software in these cases.

Because genAI is not constrained to those original limits, further experimentation to help optimize your results is even more critical.

Two years later, I breached the Gap 3 challenge. My work in teaching and consulting is reducing my capacity for DIY experimentation, while at the same time, the pace of change in AI is accelerating. There are some days I don’t feel like I’m behind. But every single time I devote more time to testing, I hit a “wow” moment.

Clearly, I am the limitation, not the AI technology. It’s been helpful to me to remain plugged in to thought leaders who remind us that this is the “worst AI” we’ll ever work with.

More content. Is it any better?

I always credit Scott Brinker with helping shape my “citizen martech” views. I was eager to take a look at the latest MarTech for 2025 report from Brinker and Frans Riemersma. But given my capacity crunch, I only had time for a quick read, not the thorough review I’d prefer. (You’ll find a summary and download links on the MarTech website.)

A quick scan of the report revealed that the leading AI use cases focus on content and personalization across various stages of ideation and distribution. The number of mentions of these applications alone tells us the impact of AI is not, in fact, over-hyped.

But the jury is still out on whether customers are feeling improvements. That’s where my latest AI DIY experimentation revealed some insights for the future of personalization.

Personalized content ‘channels’

I will absolutely go back to read Brinker and Riemersma’s report, which spans more than 100 pages, in full. However, I want to share two AI-infused shortcuts that helped me close the value gap, and simultaneously signal the future of more personalized content.

First, before a recent drive, I loaded Brinker’s chiefmartec articles into ElevenLabs’ Reader. The AI-generated voice read the latest articles aloud. If you haven’t tried this, it’s under-hyped, and it will forever change your consumption of long-form content.

Screenshot of ElevenLabs’ Reader app.

Next, I loaded the full report into Google’s NotebookLM, and listened to the audio overview it generated.

The audio overview creates an AI-generated “podcast” with two enthusiastic hosts discussing themes from the source material you chose. It allows you to upload documents, YouTube links, web pages and more.

I also created a NotebookLM AI overview for my most recent series of articles for MarTech.

It’s the “source grounding” that makes NotebookLM so powerful, while still taking advantage of the overall model (Gemini) in this multimodal format. As Steven Johnson, one of NotebookLM’s co-creators, said on the Google DeepMind podcast, it is “a kind of personalized AI that is an expert in the information that you care about.”

If you’re interested in NotebookLM, keep testing beyond the audio overview. NotebookLM allowed me to have a “chat” with Brinker and Riemersma through my back and forth with the AI overview.

Screenshot of NotebookLM answering a question using source material.

At this point, I realized I needed to add a key topic to my learning plans for 2025: the content layer. As discussed by Rasmus Houlind, this includes a chain of multiple content LLMs working together in a martech stack to improve personalization.

I think Houlind would agree with my thoughts on the need for a customer tone, leveraging the data from prior interactions like emails, meeting notes and more, which I proposed in Part 2 of my series.

Our roadmap to personalization

This personalized content discovery is a combination of the overarching trends I’ve written about in 2024. The ROI of crossing the original DIY Chasm two years ago with genAI was well worth it, but it has a shorter lifespan as we go through AI vertigo. I need to plan for more time dedicated to DIY Chasm crossing than ever.

With the help of the personal AI tech infusions I discussed here, I was still able to gain valuable insights despite a time crunch. Thanks to Q&A, engagement with AI and my preferred audio formats, AI helped drive personalization of the content’s key insights, on my own terms.

Now, we just have to prioritize efforts to scale these approaches for our customers.

Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the martech community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.